Apple’s AR-enhanced iPad Pro has been on the market for a couple of months now, but only a few AR apps have taken advantage of the device’s new lidar depth sensor. The rear sensor may be a glimpse of technology that will appear in future iPhones, so early iPad Pro apps could offer insight into what Apple will do next.

About Occipital

Occipital’s new house-scanning app, Canvas, may be the clearest indicator of what’s to come in the next wave of depth-enhanced apps. Occipital is a Boulder, Colorado-based company that has been making depth-sensing camera hardware and apps for years, similar to what Google’s early Tango tablets and phones offered.

When combined with image capture, Occipital’s Structure camera could measure depth in a room and create a 3D-mapped mesh of a space. In the years since, companies like 6D.ai have worked out ways to calculate and map space without any additional depth-sensing hardware, and Occipital’s scanning capabilities have likewise moved to a software-based approach.

About Canvas App

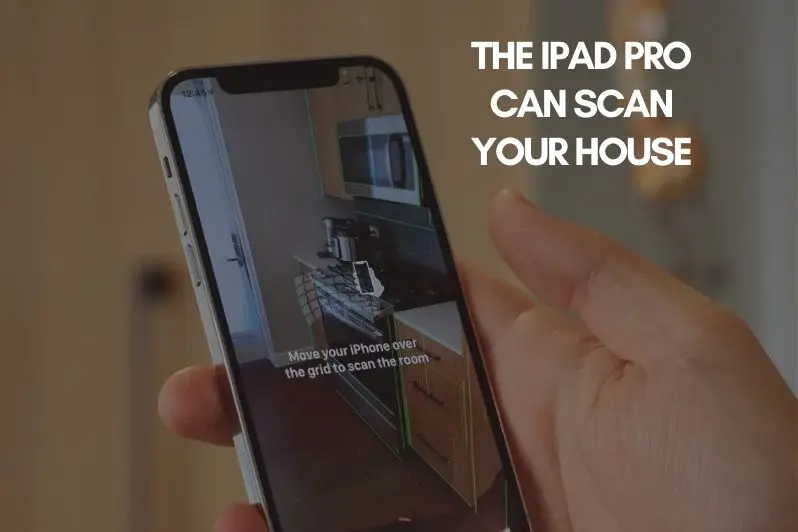

The new Canvas app from Occipital uses standard iPhone and iPad cameras to mesh and map rooms easily. The company is also adding support for the iPad Pro’s lidar sensor, however, because of that sensor’s greater depth-sensing range. Occipital sees the additional depth data as a complement to what it already does with camera data.

Because the 2020 iPad Pro models make up only a small subset of Apple’s AR-ready devices, few developers may have targeted the iPad Pro’s unique lidar depth sensor so far. As depth-sensing technology spreads across the line to phones and other iPads, other companies may follow Occipital’s lead.

Room scans can look rough at first, but the data can be improved over time, and scans can also be converted into CAD models for use by professional designers.

The lidar on the iPad Pro isn’t a camera: it’s a separate, camera-free sensor that captures depth as clusters of points in space, which together form a grid or 3D map. Occipital’s app can also stretch images into a virtual 3D mesh using camera data, but the two processes are different. Future AR cameras, on the other hand, might be able to integrate the two more closely.

For anyone who is spending all their time at home and avoiding home-improvement visits, the appeal of scanning rooms to get analysis and expert opinions remotely is easy to see. Apple is set to announce more significant improvements to its AR tools at its remote WWDC developer conference soon, and expanding the lidar sensor’s functionality and how it works with camera data might be the next move for iPhones.

To learn more about renting iPads for commercial use with AR-based applications, please get in touch with one of our rental experts about global deployment of iPads.